INTRODUCTION

For the past 70 years, residency programs have selected prospective residents through the National Resident Matching Program.1 For residency programs, the process of evaluating and ranking residency applicants has traditionally relied on medical school reputations and numerical data, such as standardized test scores and grades. Although scores and grades are theoretically objective and can quickly screen thousands of applications, these factors have not strongly predicted clinical performance. They are also subject to structural racism and unconscious bias.2–6

In 2022, the United States Medical Licensing Examination (USMLE) changed its Step 1 exam from reporting a numeric score, which was commonly used to filter applications, to reporting a pass/fail outcome.6,7 However, the USMLE Step 2 Clinical Knowledge (CK) exam still uses a numerical score. As a result, this score may be used as a metric in an initial application screen8–11 and, thus, may carry the same pitfalls as the historical Step 1 score.

In 2020 and 2021, our general surgery program, located at a large tertiary care hospital in the New York Metropolitan Area, received more than 1400 applications each year to fill 7 categorical and 5 preliminary positions. This volume of applications has historically been untenable for faculty to sift through without first using automatic numerical filters and is difficult for a small group to review within a tight timeframe. Even after culling the applicant pool, we did not have a standard approach to holistically review all parts of an application, nor did we have a way to evaluate comparable, consistent, or trackable measures among reviewers. Without a standard approach and more resources, the review process would remain complicated, consume time, and risk perpetuating bias.

One strategy to expand review resources is to include residents. Residents have the capacity to help with the selection process and are a reliable resource for evaluating applications.12 They can also augment the pool of reviewers, reduce the individual reviewer workload, expand the diversity of perspective when considering applicants, and enhance engagement with their own experiences. Also, when residents are included in the review process, programs acknowledge their vital role in defining the landscape of a residency program.

In this study, we aimed to restructure the application review process to create a simplified, holistic approach that incorporates residents in evaluating a broader pool of residency applicants. Our goals were to develop a review process that is (1) fair in supporting an equitable and inclusive evaluation of applicants that limits bias; (2) feasible and easy to use; (3) accurate in assessing what we think are important qualities in trainees; (4) preferable or equal to the prior process; (5) precise in generating transparent, objective, and replicable scores with acceptable variability between scorers; and (6) non-inferior in matching from the top tier of our rank list.

METHODS

Scoring Rubric Development

We convened a committee of faculty and residents to determine selection criteria for applicants to our general surgery residency program. These criteria were based on the goals and values of the residency program, the mission of the hospital, and the core competencies of the Accreditation Council for Graduate Medical Education (ACGME). The committee agreed that ideal applicants embodied 5 core categories of equal weight: service/teaching, education/life-long learning, leadership/teamwork, research/scholarly activity, and perseverance/commitment.

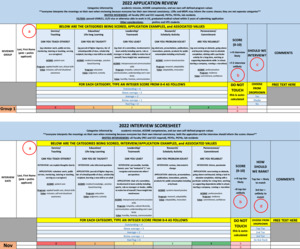

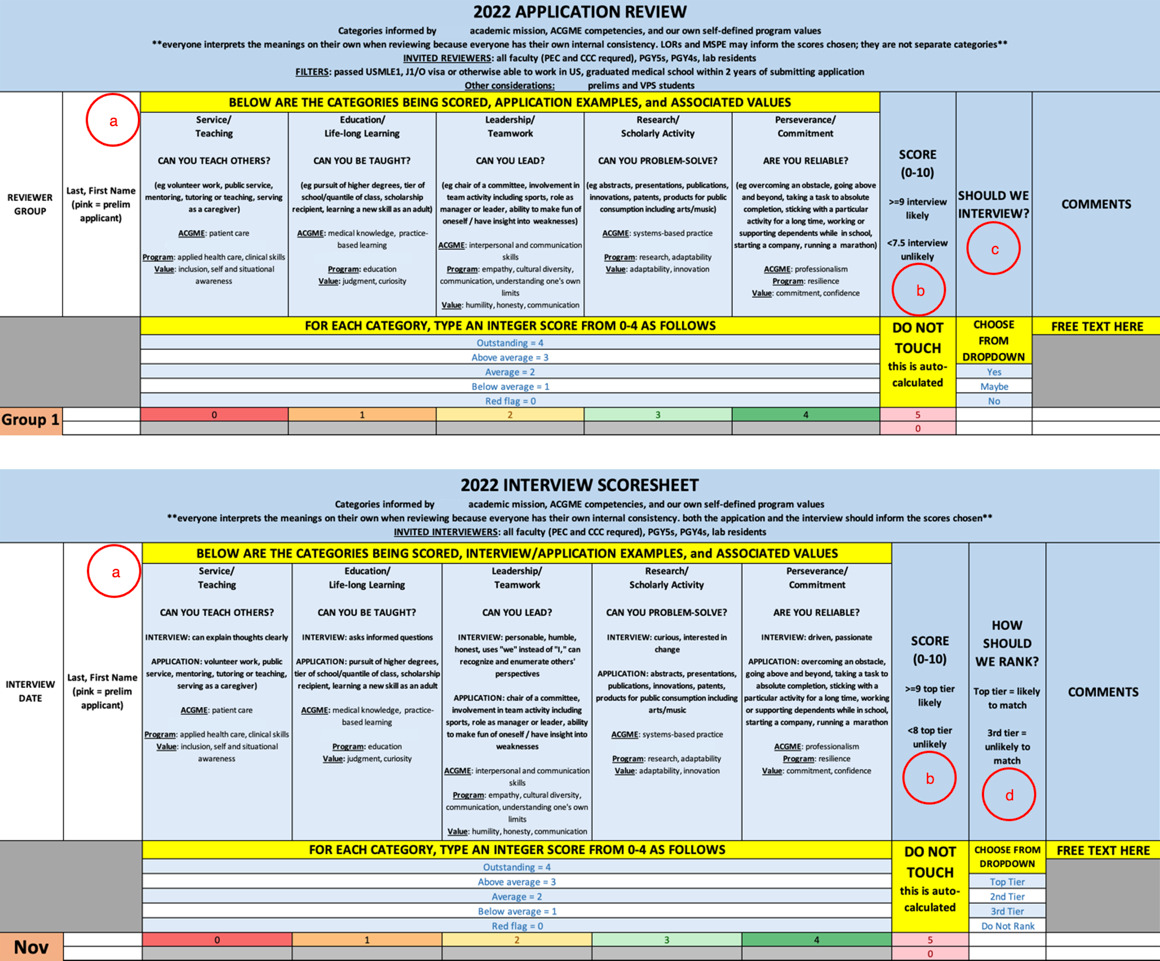

With these core categories, we developed a standardized rubric for scoring Electronic Residency Application Service (ERAS) applications and interviews. The rubric was optimized each year until the final rubric was approved in 2022 (Fig. 1). The improvements included adjusting the appearance and approach to the embedded calculations based on feedback from applicant reviewers.

On the rubric, each category was quantified on a 5-point Likert scale from “Red Flag” to “Outstanding”. Each category was also clarified with a guiding question and possible examples of candidate (1) activities and accomplishments that can be gleaned from the application and (2) behaviors that can be noted in interviews. All categories were assigned equal weight. To be fully transparent with participants, we also included our filters, reviewer composition, and scoring implications at the top of the rubric. We included an optional field “Should we interview?” to cross-check that our rubric generated commensurately high scores for applicants whom reviewers wanted to interview. To improve our final ranking strategy, we also included the optional field: “How should we rank?” (Fig. 1).
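As a rough illustration of how equally weighted Likert ratings can be reduced to a single scoresheet value, the sketch below averages five ratings and rescales the mean to a 0 to 10 range. The 1 to 5 numeric coding, the rescaling step, and the function name `scoresheet_score` are assumptions made for illustration only; the rubric's embedded calculation is not reproduced here and may differ.

```python
# Hypothetical sketch: equal-weight scoring of the five rubric categories.
# Assumes each category is rated 1 ("Red Flag") to 5 ("Outstanding");
# the rubric's actual embedded calculation may differ.
CATEGORIES = (
    "service/teaching",
    "education/life-long learning",
    "leadership/teamwork",
    "research/scholarly activity",
    "perseverance/commitment",
)


def scoresheet_score(ratings: dict[str, int]) -> float:
    """Average the five ratings with equal weight and rescale to 0-10."""
    missing = [c for c in CATEGORIES if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings: {missing}")
    for c in CATEGORIES:
        if not 1 <= ratings[c] <= 5:
            raise ValueError(f"rating for {c} must be between 1 and 5")
    mean_rating = sum(ratings[c] for c in CATEGORIES) / len(CATEGORIES)
    return (mean_rating - 1) * 2.5  # assumed rescaling: 1 -> 0, 5 -> 10
```

Because every category enters the mean with the same weight, no single domain (for example, research) can dominate the scoresheet value.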

Application Reviews and Interviews

To fully represent our program, we invited both faculty and senior residents to review applications and conduct interviews. All core educational faculty were required to participate. This greater volume of reviewers ensured we could review an expanded pool of applications and that each reviewer could manage the workload (30–50 applications) despite their other clinical, academic, and administrative duties. To recognize applicants’ and reviewers’ diversity of background and experience, we assigned at least 2 reviewers to each application. To maximize the number of perspectives, applicants invited for interviews met with 3 interviewers who were different from their application reviewers. Each application had no more than 1 resident reviewer, and each applicant being interviewed had no more than 1 resident interviewer. All participants completed training on how to use the scoring rubrics. However, to respect and embrace the different views of interviewers and to support ranking a diverse pool of residents, we avoided being overly prescriptive about scoring.

After application reviews, we consolidated scoresheets to generate a composite master score for each applicant. We predetermined that interviews would be granted to all top scorers in addition to all internal applicants and subinterns to fill a set number of interview slots. After interviews, sum scores were again calculated and averaged across interviewers for each applicant, and these averages were then used to create a semifinal rank list. Program leadership, faculty, and senior residents then jointly discussed all data points and adjusted the list to create a final rank list for matching.
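The consolidation and invitation steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: the composite is taken to be the mean of each applicant's scoresheet values, automatic invitees (internal applicants and subinterns) are placed first, and remaining slots are filled in descending score order. The function names, the tuple layout, and the tie handling are hypothetical and are not the tool used in the study.

```python
from statistics import mean


def composite_scores(scoresheets):
    """Average each applicant's scoresheet values across reviewers.

    scoresheets: iterable of (applicant_id, reviewer_id, score) tuples.
    """
    by_applicant = {}
    for applicant_id, _reviewer_id, score in scoresheets:
        by_applicant.setdefault(applicant_id, []).append(score)
    return {a: mean(s) for a, s in by_applicant.items()}


def select_for_interview(composites, automatic, slots):
    """Invite automatic applicants first, then top scorers until slots fill.

    automatic: set of applicant ids invited regardless of score
    (assumed here to be internal applicants and subinterns).
    """
    invited = [a for a in composites if a in automatic]
    ranked = sorted(
        (a for a in composites if a not in automatic),
        key=lambda a: composites[a],
        reverse=True,
    )
    remaining = max(slots - len(invited), 0)
    return invited + ranked[:remaining]


# Made-up example data, not study data.
sheets = [
    ("A", "r1", 8), ("A", "r2", 6),
    ("B", "r1", 9), ("B", "r2", 9),
    ("C", "r1", 4), ("C", "r2", 5),
]
composites = composite_scores(sheets)
invitees = select_for_interview(composites, automatic={"C"}, slots=2)
```

The same averaging function could be reused after interviews by passing interviewer scoresheets instead of application scoresheets.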

Bias Mitigation

To minimize explicit and implicit biases among reviewers and interviewers, we took several measures. Using ERAS filters, we selected all candidates who passed the USMLE Step 1 rather than setting a specific score cutoff. Also, we included any candidates who graduated medical school within the past 2 years rather than focusing only on applicants who were currently in their last year of medical school. To purposefully structure the application content seen by reviewers, we sent packets to reviewers as PDFs rather than giving reviewers direct access to ERAS. Although we could not remove the applicant names or pronouns from the packets, we omitted applicant photos, placed curricula vitae and personal statements at the beginning of each PDF, and moved medical school grades and USMLE exam scores to the end of each PDF. To prevent bias from seeing others’ scores and comments, we gave each reviewer separate scoresheets and discouraged them from discussing applicants before submitting their scoresheets for data compilation.

To encourage standardized interviews, we suggested value-based interview questions. Also, all interviewers completed training on implicit bias developed by our graduate medical education office. In 2020, we considered granting interviews to applicants whose scores fell below the top tier but for whom current or former faculty provided pointedly high recommendations. Given inherent bias in this loophole, we eliminated the option altogether in our second year.

Quality Analysis

To assess the quality of the selection process, we analyzed scoring rubrics for accuracy, precision, and variability between reviewers. We also assessed the feasibility and fairness of the review process by developing and distributing anonymous electronic surveys to reviewers and interviewers. Each year, we refined the scoring rubric based on our experience and reviewer feedback, which led to our final rubric in 2022 (Fig. 1). We also evaluated the match process by comparing numerical rubric scores to the final rank list and how highly we matched candidates within the rank list. This study met the criteria for Institutional Review Board exemption.

The Team

Project leadership comprised the director and associate director of the general surgery residency program. These leaders developed the concept and implementation strategy by engaging members of the Program Evaluation Committee (PEC), including core educational faculty and resident representatives. The associate program director created the scoring tool and instructions for users, iteratively improved the tool with input from reviewers, collated data, performed data analyses, and shared results with participants. The application reviewers and interviewers included all members of the PEC and Clinical Competency Committee (CCC), as well as other faculty and senior residents participating in the selection process.

Statistical Analysis

To assess differences between mean faculty and resident scores, a Welch two-sample t-test was performed using R statistical software (version 4.3.1).
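For readers unfamiliar with the Welch test, the sketch below computes its test statistic and the Welch-Satterthwaite degrees of freedom using only the Python standard library. It is an illustration of the method, not the R analysis used in the study: the function name `welch_t` and the example values are hypothetical, and the P value (which requires the t distribution) is left to a statistics package.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance two-sample t-test."""
    na, nb = len(a), len(b)
    # Squared standard error of each group mean (sample variance / n).
    se2_a = variance(a) / na
    se2_b = variance(b) / nb
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1)
    )
    return t, df


# Made-up mean scores per reviewer, NOT study data.
faculty = [7.0, 6.5, 8.0, 7.5, 6.0]
residents = [7.5, 7.0, 8.5, 6.5]
t_stat, dof = welch_t(faculty, residents)
```

Unlike the pooled-variance t-test, this form does not assume that the faculty and resident groups have equal variances or equal sizes.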

RESULTS

To learn how reviewers and interviewers felt about the standardized process, we first surveyed reviewers after each application review cycle. In 2020, 12 of 21 (57%) reviewers responded to the survey; in 2021, 21 of 40 (53%) reviewers responded. In 2021, we also surveyed interviewers after the first interview day, to which 9 of 21 (43%) interviewers responded. Most of the 42 total respondents reported that the approach was fair (40 fair, 1 neutral, 1 unfair), easy to use (38 easy, 1 neutral, 3 difficult), allowed full evaluation of applicants (37 satisfied, 2 neutral, 3 dissatisfied), and accurately reflected the qualities needed for a successful resident in our program (31 yes, 9 neutral, 2 no) (Table 1). The 25 respondents who were involved in the prior application review process indicated that the standardized process was preferable or at least equal to the prior process (12 yes, 9 neutral, 4 no). When asked why the standardized process was preferable, respondents cited objectivity, organization, fairness, and categorization.

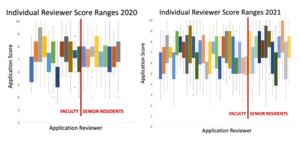

We assessed precision by evaluating variability between completed scoresheets. In 2020, reviewers’ scores were highly precise, with 91% of applications scoring within 2 points between reviewers (Fig. 2). In 2021, this precision was lower at 78%. Notably, the score calculation generated values from 2 to 10 in 2020 but was adjusted to generate values from 0 to 10 in 2021. When reviewer scores were compared to other faculty and residents, the score distributions did not greatly differ between reviewers or among the whole group (Fig. 3, 4). Also, resident scores were similar to faculty scores and consistent with the whole group (Fig. 3, 4). Mean faculty and resident scores were not significantly different in 2020 [faculty mean, 7.21 (n = 13); resident mean, 7.2 (n = 9); P = .78] or 2021 [faculty mean, 6.72 (n = 27); resident mean, 7.17 (n = 13); P = .11].
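One way to compute an agreement measure of this kind is sketched below. It assumes that "within 2 points" means the difference between the highest and lowest reviewer score for an application is at most 2, and that applications with a single scoresheet are excluded; both are assumptions, and the function name and example data are hypothetical.

```python
def share_within(scores_by_application, tolerance=2.0):
    """Fraction of applications whose reviewer scores span <= tolerance."""
    spans = [
        max(scores) - min(scores)
        for scores in scores_by_application.values()
        if len(scores) >= 2  # agreement is undefined with one reviewer
    ]
    if not spans:
        raise ValueError("no application has two or more scores")
    return sum(span <= tolerance for span in spans) / len(spans)


# Made-up scores, not study data.
example = {
    "A": [7, 8],     # span 1, within tolerance
    "B": [5, 9],     # span 4, outside tolerance
    "C": [6, 6, 8],  # span 2, within tolerance
    "D": [3],        # single reviewer, excluded
}
agreement = share_within(example)
```

A fixed tolerance is sensitive to the width of the score scale, so agreement percentages from years with different score ranges are not directly comparable.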

Ultimately, we invited applicants with the highest composite scores for interviews (Fig. 5; n = 68 categorical applicants, n = 9 preliminary applicants). These composite scores did not closely correlate with the USMLE scores. We also invited applicants who met predetermined criteria (internal applicants and subinterns) and, in 2020 only, we granted interviews to a subset of applicants whose scores fell below the top tier but for whom current or former faculty provided pointedly high recommendations. These invitations led to about 100 interviews each year (2020, n = 102; 2021, n = 105).

After the interviews, we created our preliminary and final rank lists. Most top scorers (2020, 82%; 2021, 85%) remained in the top tier of the final rank list. In both years, all categorical residents matched from within the top tier of the final rank list, as in prior years.

DISCUSSION

In this study, we standardized the application review and interview process for applicants seeking to match to our general surgery residency program. We designed this process to include valuable resident participation, mitigate bias, and focus on our program’s core values and strengths. Our process was feasible to implement, easy to use, at least as good as our prior approach, and accurate in selecting the top tier of applicants for matching. These findings support our standardized, resident-inclusive process as a valuable, holistic approach for fairly, easily, and accurately selecting residency applicants for our general surgery program.

Medical schools rely on a holistic review process to recruit future physicians who align with the school’s mission, reduce bias in applicant selection, and, ultimately, diversify the physician workforce.13–15 Residency programs may similarly benefit from a holistic selection process. The transition to pass/fail scoring on the USMLE Step 1 exam may have served as a catalyst to prompt residency programs to reevaluate their selection process and restructure it to fit the needs of a dynamic educational landscape. One method to restructure a program would be to simply use the remaining available application materials (eg, Step 2 CK score, number of publications, Alpha Omega Alpha status, letters of recommendation) for screening and selecting applicants to interview and rank.16–19 However, the USMLE score change is also an opportunity for programs to introduce a standardized, holistic review process. With such a process, residency programs can maintain the integrity of selecting highly competitive applicants with desirable, predefined attributes while recruiting a more diverse resident class.20,21

Although our review process included faculty and residents, increasing their overall engagement in educational processes remains a challenge. Commonly, only faculty and a select few senior residents are involved in the applicant evaluation and selection process. Although we still need to expand and diversify our reviewer pool, our new standardized approach established an easy, feasible method that will help to recruit more faculty and residents to participate in reviewing residency applications. Including a diverse group of senior residents and faculty in this process should be examined in future efforts as a potential means of addressing this challenge.

Our approach exemplifies a strategy for other residency programs to develop standardized processes for selecting residency applicants in their departments. Based on our experience in developing this process, we suggest that other residency programs consider the following recommendations. Before implementation, programs would benefit from engaging and aligning department faculty/leadership, creating a hierarchy of values, calculating department capacity for accommodating interviews, assembling a team of core reviewers and interviewers, distributing new score rubrics, educating reviewers, and designing metrics to evaluate quality improvement. After implementation, programs would benefit from taking steps to ensure the quality of the new process, assessing outlier scores and missing values, collecting feedback from reviewers, and using feedback to adjust the process.

CONCLUSIONS

We established a standardized, holistic process for reviewing applications, interviewing applicants, and accurately ranking applicants for matching in our general surgery residency program. This standardized process includes residents as valuable resources in the review process and acknowledges their important role in the educational landscape of our residency program. This successful standardized process is a valuable approach that other residency programs can use as a model for developing their own standardized process for selecting residents to match to their program.